About a month ago, Israel-based Corsight AI began offering its global clients access to a new service aimed at rooting out what the retail industry calls “sweethearting”: instances of store employees giving people they know discounts or free items.

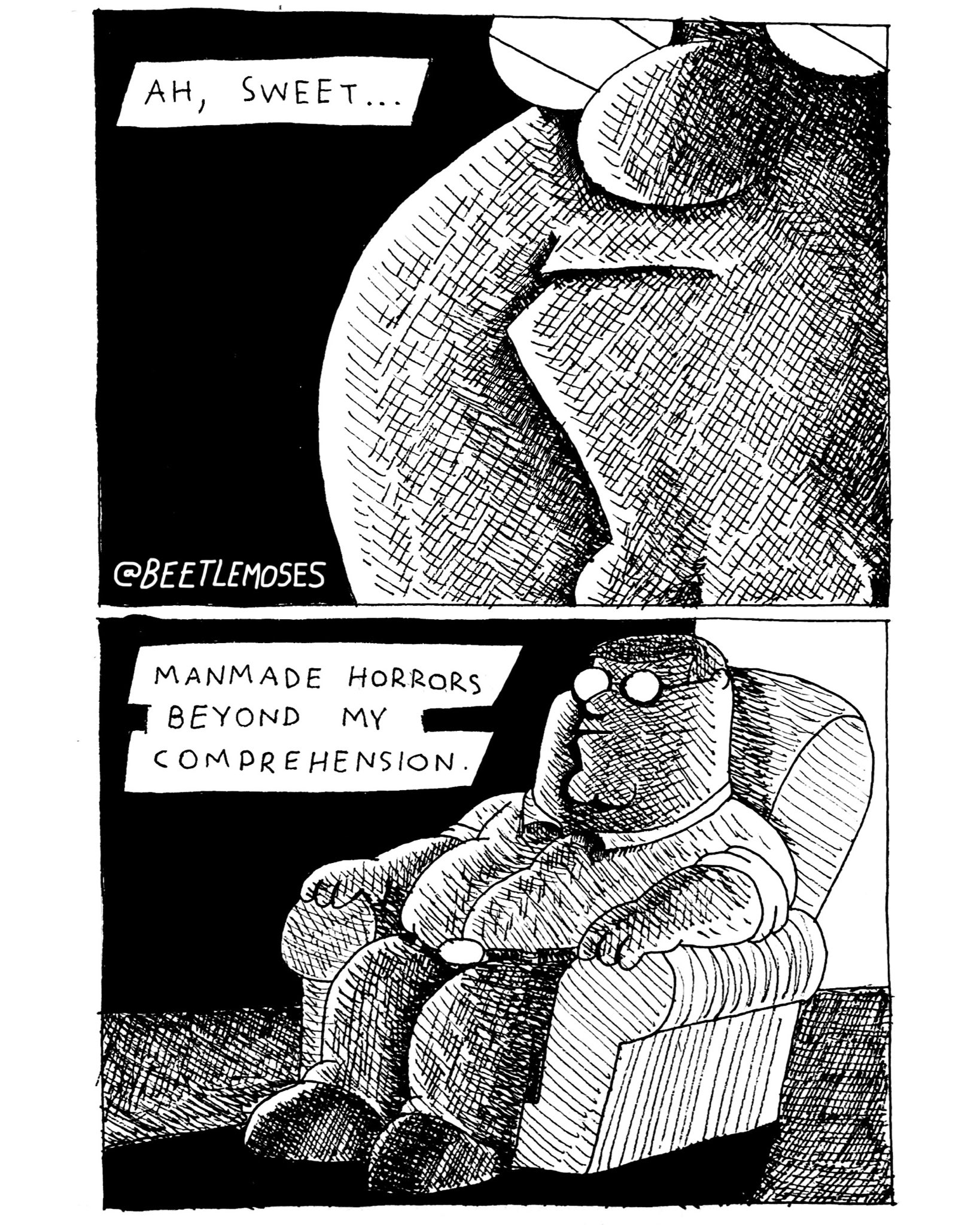

Lol, I hope stores that use this lose millions on this stupid-ass privacy invasion. Anyone stupid enough to believe that the savings from catching a 10% employee discount occasionally used for friends or whatever are going to offset whatever the fuck this latest torment nexus costs frankly deserves to be swindled.