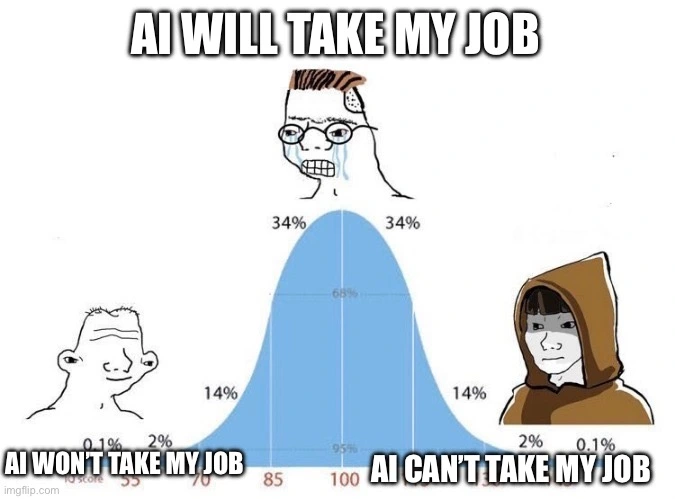

I think AI can take far fewer jobs than people will try to replace with AI, that's kind of the issue

Highly skilled jobs will just start using AI as a tool to automate routine work (or have already started, in some cases). The most efficient use of the AIs we have now is to pair them with a human, anyway.

The worry is focused on the amount of damage that is likely to be done by the people in decision-making positions thinking they can save money by removing more paid positions.

Companies will save so much money once they decide to replace their CEOs with AIs..

Tbf most could do it for cheaper with a dartboard and some post-its

I never understood this. How could the CEO be replaced? Who would be controlling the AI? Wouldn't that person just be the new CEO? I have so many questions...

The shareholders would do a ‘Twitch plays’ on the ai

If you are seriously trying to understand how to do it... well, you can't. Current AIs can't fully replace anybody, and it's an open question whether they can partially replace (AKA improve the productivity of) anybody to any impactful extent.

Depending on how loosely you define AI, current AIs are great at replacing warehouse workers and jobs that rely heavily on routine and have little to no innovation and critical thinking involved.

Fire all staff

Receive billion dollar check

Walk away before it all collapses

Repeat

Look, I already got the algorithm written right here!

The problem with humans reviewing AI output is that humans are pretty shit at QA. Our brains are literally built to ignore small mistakes. Digging through the output of an AI that's right 95% of the time is nightmare fuel for human brains. If your task needs more accuracy, it's probably better to just have the human do it all, rather than try to review it.
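To put rough numbers on that, here is a back-of-the-envelope sketch. The 95% figure is from the comment above; the reviewer catch rate is a made-up assumption purely for illustration, not a measured value, and the function names are hypothetical.

```python
def residual_error_rate(ai_accuracy: float, reviewer_catch_rate: float) -> float:
    """Fraction of items that are still wrong after a human review pass.

    ai_accuracy: share of AI outputs that are correct (0.95 in the comment above).
    reviewer_catch_rate: share of AI mistakes the human actually notices
        (hypothetical; pick your own value).
    """
    ai_error_rate = 1.0 - ai_accuracy
    missed_share = 1.0 - reviewer_catch_rate
    return ai_error_rate * missed_share


def expected_missed_errors(items: int, ai_accuracy: float, reviewer_catch_rate: float) -> float:
    """Expected number of wrong items that slip past review in a batch."""
    return items * residual_error_rate(ai_accuracy, reviewer_catch_rate)


if __name__ == "__main__":
    # Assumed scenario: 1,000 items, AI right 95% of the time,
    # reviewer catches 70% of the mistakes (illustrative guess).
    print(expected_missed_errors(1000, 0.95, 0.70))
```

With those assumed inputs, roughly 15 bad items per 1,000 still get through, and the reviewer had to read all 1,000 to get there. Whether that beats doing the task by hand depends entirely on the real catch rate, which this sketch does not know.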

Then each QA human will be paired with a second AI that will catch those mistakes the human ignores. And another human will be hired to watch that AI and that human will get an AI assistant to catch their mistakes.

Eventually they'll need a rule that you can only communicate with the human/AI directly above you or below you in the chain to avoid meetings with entire countries of people.

Should note that a lot of the Microsoft Recall project revolves around capturing human interactions on the computer in real time continuously, with the hope of training a GPT-5 model that can do basic office tasks automagically.

Will it work? To some degree, maybe. It'll definitely spit out some convincing looking gibberish.

But the promise is to increasingly automate away office and professional labor.

"Take this code and give me jest tests with 100% coverage. Don't describe, don't scaffold, full output."

Saves me hours.

Oh, don't worry, the errors you see will go away quickly, assuming they aren't a feature.

Basically it is going the following way:

- Company gets AI to do stuff.

- Company fires its workforce.

- AI isn't up to the task, and often disliked by people, see its unpopularity in the arts.

- Company has to rehire staff, first to try to salvage the AI's output, then to just go back to the good old days of human creativity.

AI isn't magic, no matter how much techbros try to humanize the technology because NeuRAl nEtWOrKs.

How about:

Company rehires a percentage of its workforce, with the lowered demand for those specific workers driving salaries down.

Do you mean AI, just Generative models, or LLMs in particular? I'm pretty thoroughly convinced that AI is a general solution to automation, while generative models are only a partial but very powerful solution.

I think the larger issue is actually that displacement from the workforce causes hardship to those who have been displaced. If that were not the case, most people either wouldn't care or would actively celebrate their jobs being lost to automation.

I was really confident. Then I lost a job to AI. Then they hired me back a few months later after realizing that replacing half the support team with an AI was not working out.

How did your compensation change when you were rehired?

Rehired with all my previous tenure benefits with the added raise they would have given me had I been around when they gave out raises.

So your compensation effectively didn't change at all, if you'd have gotten the raise anyhow?

Damn.

I was in a very, very rough spot. It was mostly worth taking the offer. It sure beat wasting 13 years of obscure product knowledge at some new job for the lower pay others were offering.

I hope you at least gave them a nice smug smile when you walked back in.

I get to make regular jokes about "being the new guy" and subtly throw shade at the management team any chance I get.

Human Intelligence 'Bob' checking in

Hopefully at least farts outside the CEO's office every time they walk by.

Yeah that's totally understandable. It's just so scummy that suits know they can fire people for some idiotic whim like the current "AI" craze, and then when it inevitably blows up in their faces they can rehire the folks they just fired and for no extra cost because they know people will be desperate. Small wonder they didn't cut your pay.

My first sentence when I get connected to a chat bot is always "Let me speak to a human".

This was exactly my experience. Freaked myself out last year and decided the best thing was to dive headfirst into it to figure out how it worked and what its capabilities are.

Which - it has a lot. It can do a lot, and it's impressive tech. Coded several projects and built my own models. But, it's far from perfect. There are so so so many pitfalls that startups and tech evangelists just happily ignore. Most of these problems can't be solved easily - if at all. It's not intelligent, it's a very advanced and unique prediction machine. The funny thing to me is that it's still basically machine learning, the same tech that we've had since the mid 2000s, it's just we have fancier hardware now. Big tech wants everyone to believe it's brand new... and it is... kind of. But not really either.

The funny thing to me is that it’s still basically machine learning, the same tech that we’ve had since the mid 2000s, it’s just we have fancier hardware now.

So much of the modern Microsoft/ChatGPT project is effectively brute-forcing intelligence from accumulated raw data. That's why they need phenomenal amounts of electricity, processing power, and physical space to make the project work.

There are other - arguably better, but definitely more sophisticated - approaches to developing genetic algorithms and machine learning techniques. If any of them prove out, they have the potential to render a great deal of Microsoft's original investment worthless by doing what Microsoft is doing far faster and more efficiently than the Sam Altman "Give me all the electricity and money to hit the AI problem with a very big hammer" solution.

It takes a lot of energy to train the models in the first place, but very little once you have them. I run mixture of agents on my laptop, and it outperforms anything OpenAI has released on pretty much every benchmark, maybe even every benchmark. I run it quite a bit and have noticed no change in my electricity bill. I imagine inference on GPT-4 must also be very efficient; if not, they should just switch to piping people open-source LLMs run through MoA.

Are you saying you have a local agent that is better than anything OpenAI has released? Where did this agent come from? Did you make it from scratch? How are you not worth billions if you can outperform them on "every benchmark"?

My dude, no, I'm not the creator, settle down. Mixture of agents is free and open to anyone to use. Here is a demo of it by Matthew Berman. It isn't hard to set up.
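For anyone wondering what "mixture of agents" means in practice, here is a minimal sketch of the general idea as I understand it: several models each draft an answer, later rounds get to see the earlier drafts, and a final model merges the candidates. This is not the code from any particular MoA project; `mixture_of_agents`, the `Model` type and the stub models are hypothetical names, and a real setup would swap the stubs for calls to actual local models.

```python
from typing import Callable, List

# Hypothetical interface: a "model" is anything that maps a prompt to text.
Model = Callable[[str], str]


def _numbered(answers: List[str]) -> str:
    """Format answers as a numbered list for inclusion in a prompt."""
    return "\n".join(f"{i + 1}. {a}" for i, a in enumerate(answers))


def mixture_of_agents(
    prompt: str,
    proposers: List[Model],
    aggregator: Model,
    layers: int = 2,
) -> str:
    """Run `layers` rounds of proposals, then merge them with the aggregator."""
    drafts: List[str] = []
    for _ in range(layers):
        context = prompt
        if drafts:
            # Later rounds see the previous round's drafts and can refine them.
            context = f"{prompt}\n\nPrevious answers:\n{_numbered(drafts)}"
        drafts = [model(context) for model in proposers]
    # The aggregator sees the final drafts and writes one combined answer.
    return aggregator(
        f"{prompt}\n\nCandidate answers:\n{_numbered(drafts)}\n\n"
        "Write the best single answer."
    )


if __name__ == "__main__":
    # Stub models so the sketch runs without any LLM installed.
    terse: Model = lambda p: "Short answer."
    wordy: Model = lambda p: "A much longer, more detailed answer."
    pick_longest: Model = lambda p: max(p.splitlines(), key=len)
    print(mixture_of_agents("What is MoA?", [terse, wordy], pick_longest))
```

The stubs are only there to show the plumbing. Any quality gain comes from the models you plug in, and every extra proposer or layer multiplies the number of inference calls.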

Believe it or not, OpenAI is no longer making the best models. Claude Sonnet 3.5 is considerably better than OpenAI's best models.

It's not exactly the same tech, but it's very similar. The Transformer architecture made a big difference. Before that we only had LSTMs, which do sequence modelling in a different way that made things far back in the sequence influence the result less.

It'll take a few spectacular failures and bankruptcies before people figure out AI isn't quite what's being sold to them, I feel.

By then the startup CEOs will have made their money and run, though. Just like Blockchain.

Have you coded with Claude Sonnet 3.5 yet? It is mind-blowingly better than Opus 3, which was already noticeably better than anything OpenAI has put out yet. GPT-4 was nice to code with, but this is on a whole other level. I can't imagine what Opus 3.5 will be able to do.

The issue with Sonnet 3.5, in my limited testing, is that even with explicit, specific, and direct prompting, it can't perform at anything near human ability, and will often make very stupid mistakes. I developed a program which essentially lets an AI program, rewrite, and test a game, but Sonnet will consistently take lazy routes, use incorrect syntax, and call the same function over and over again for no reason. If you can program the game yourself, it's a quick way to prototype, but unless you know how to properly format JSON and fix strange artefacts, it's just not there yet.

Which is why, as an engineer, I can either fiddle with a prompt for half an hour... or just write the damn method myself. For juniors it's an easy button, but for seniors who know how to write these algorithms it's usually just easier to write it up. Some nice starter code though, gets the boilerplate out of the way.

Yeah. It's really interesting because juniors and hobbyists are the ones getting used to how to interact with it. Since it is rapidly improving, it won't be long until it outpaces the grunt-work ability of seniors, and the new seniors will be the ones willing and able to use it. Programming is switching away from being able to write tedious code and towards being able to come up with ideas and convey them clearly to an LLM. There's going to be a real leveling of the playing field when even the best seniors won't have any use for most of their grunt-work coding skills. The jump up from Opus 3 to Sonnet 3.5 is absolutely insane, and Opus 3.5 should be here before too long.

That's really interesting. For Android Studio it's been absolutely crushing it for me. It's taken some getting used to, but I've had it build an app with about 60 files. I'm no master programmer, but I've been a hobbyist for a couple of decades. What it's done in the last 5 days for me would have taken me 2 months easy, and there are lots of extra touches that I probably wouldn't have taken the time to do if it wasn't as simple as loading in a few files and telling it what I want.

Usually when I work on something like this, my todo list grows much faster than my ability to actually put it together, but with this project I'm quickly running out of features that I can even imagine. I've not had any of the issues of it running in circles like I would often get with GPT-4.

How about anyone whose job is taken by AI gets a universal basic income paid for by taxes on those companies

I think that is what we all (except HR and billionaires) agree on.

It's such a shame that those are the exact people who have the power in this situation

There's a pretty good argument for that in a lot of other cases too; however, those at the top don't want it, or at least not in a sensible way. See the whole "UBI through stock exchange" and "Universal Basic Compute" fiasco.

Please take my job I wish to stop suffering