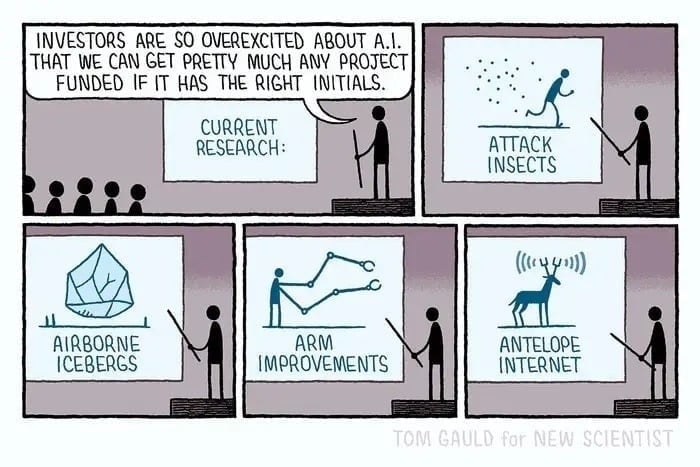

As a fervent AI enthusiast, I disagree.

...I'd say it's 97% hype and marketing.

It's crazy how much FUD is flying around, and it legitimately buries good open research. It's also crazy that these giant corporations explicitly say what they're going to do, and that anyone buys it. TSMC's allegedly calling Sam Altman a 'podcast bro' is spot on, and I'd add "manipulative vampire" to that.

Talk to any long-time resident of LocalLLaMA and similar "local" AI communities who actually dig into this stuff, and you'll find immense skepticism, not the crypto-like AI bros you find on LinkedIn, Twitter and the like, who blot everything else out.