Science Memes

Welcome to c/science_memes @ Mander.xyz!

A place for majestic STEMLORD peacocking, as well as memes about the realities of working in a lab.

Rules

- Don't throw mud. Behave like an intellectual and remember the human.

- Keep it rooted (on topic).

- No spam.

- Infographics welcome, get schooled.

This is a science community. We use the Dawkins definition of meme.

Research Committee

Other Mander Communities

Science and Research

Biology and Life Sciences

- !abiogenesis@mander.xyz

- !animal-behavior@mander.xyz

- !anthropology@mander.xyz

- !arachnology@mander.xyz

- !balconygardening@slrpnk.net

- !biodiversity@mander.xyz

- !biology@mander.xyz

- !biophysics@mander.xyz

- !botany@mander.xyz

- !ecology@mander.xyz

- !entomology@mander.xyz

- !fermentation@mander.xyz

- !herpetology@mander.xyz

- !houseplants@mander.xyz

- !medicine@mander.xyz

- !microscopy@mander.xyz

- !mycology@mander.xyz

- !nudibranchs@mander.xyz

- !nutrition@mander.xyz

- !palaeoecology@mander.xyz

- !palaeontology@mander.xyz

- !photosynthesis@mander.xyz

- !plantid@mander.xyz

- !plants@mander.xyz

- !reptiles and amphibians@mander.xyz

Physical Sciences

- !astronomy@mander.xyz

- !chemistry@mander.xyz

- !earthscience@mander.xyz

- !geography@mander.xyz

- !geospatial@mander.xyz

- !nuclear@mander.xyz

- !physics@mander.xyz

- !quantum-computing@mander.xyz

- !spectroscopy@mander.xyz

Humanities and Social Sciences

Practical and Applied Sciences

- !exercise-and sports-science@mander.xyz

- !gardening@mander.xyz

- !self sufficiency@mander.xyz

- !soilscience@slrpnk.net

- !terrariums@mander.xyz

- !timelapse@mander.xyz

Memes

Miscellaneous

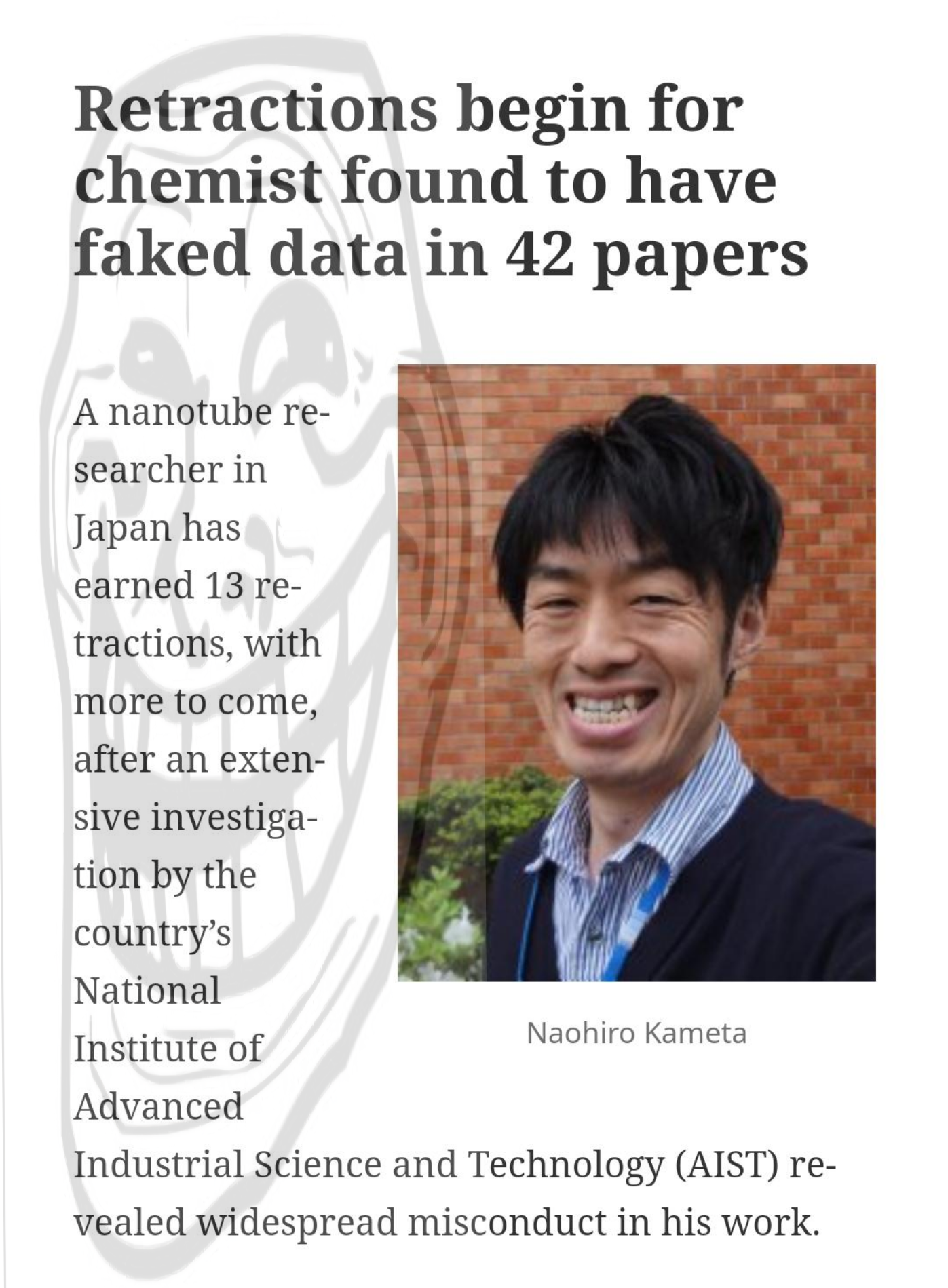

I look forward to 6 hours of BobbyBroccoli videos about this.

I love his videos. I found him one lacy Sunday afternoon and binge-watched his Schön videos. He and many others are my reason for subscribing to Nebula.

I, too, spend my Sunday afternoons draped in lace.

Ewww - the whole point of peer review is to catch this shit. If peer review isn't working, we should be going back to monographs :)

I disagree there - peer review as a system isn't designed to catch fraud at all, it's designed to ensure that studies that get published meet a minimum standard for competence. Reviewers aren't asked to look for fake data, and in most cases aren't trained to spot it either.

Whether we need to create a new system that is designed to catch fraud prior to publication is a whole different question.

> Whether we need to create a new system that is designed to catch fraud prior to publication is a whole different question.

That system already exists. It's what replication studies are for. Whether we desperately need to massively bolster the number of replication studies done is the question, and the answer is 'yes'.

But that's not S E X Y! We need new research, to earn grants and subsidize faculty pay!

An institute for reproducibility would be awesome

Agree! Maybe efforts spent working on projects assigned from the IFR would be rewarded with grant funds or grant extensions for novel projects.

We could award a certain percentage of grants to replication studies, and grad students should be able to earn degrees doing them. Unfortunately, everyone is chasing total paper count and impact factor rankings and shit.

Maybe we should consider replication studies to be "service to the community" when judging career accomplishments. Like, maybe you never chaired a conference but you published several replication studies instead. You could get your Masters students and/or undergrads to do the replications. We'd need journals that focus on replication studies, though.

Nah. Enough of this service-to-the-community stuff. It always ends up meaning we do more work for free that someone else profits from. It should be incentivized with grant funds. The studies I'd most want to see replicated are industry-sponsored ones. Industry-sponsored studies should have to pay into a pool, and certain studies would be selected for replication analysis with these funds.

Yeah, reviewing is about making sure the methods are sound and the conclusions are supported by the data. Whether or not the data are correct is largely something that the reviewer cannot determine.

If a machine spits out a reading of 5.3, but the paper says 6.2, the reviewer can't catch that. If numbers are too perfect, you might be suspicious of it, but it's really not your job to go all forensic accountant on the data.

You're conflating peer review and studies that verify results. The problem is that verifying someone else's results isn't sexy, doesn't get you grant money, and doesn't further your career. Redoing the work and verifying the results of other "pioneers" is important but thankless work. Until we incentivize the boring science by funding all fundamental science research more, this kind of problem will only get worse.

Everyone laughing about troll physics, this guy did troll chemistry. Nobody's laughing now.

Did he work with copper nanotubes, perhaps?

I'm getting the impression he worked with brass balls

Probably not a good idea to phrase it as "earned" retractions.

It shouldn't be a competition to see who is the worst.

Respect is earned, not given 😤

Troubles?

Dude even fakes his smile