this post was submitted on 14 May 2024

Programmer Humor

So many people here explaining why Python works that way, but what's the reason for numpy to introduce its own boolean? Is the Python boolean somehow insufficient?

From numpy's docs:

and likewise:

here’s a good Q&A on this topic:

https://stackoverflow.com/questions/18922407/boolean-and-type-checking-in-python-vs-numpy

plus this is kinda the tools doing their jobs.

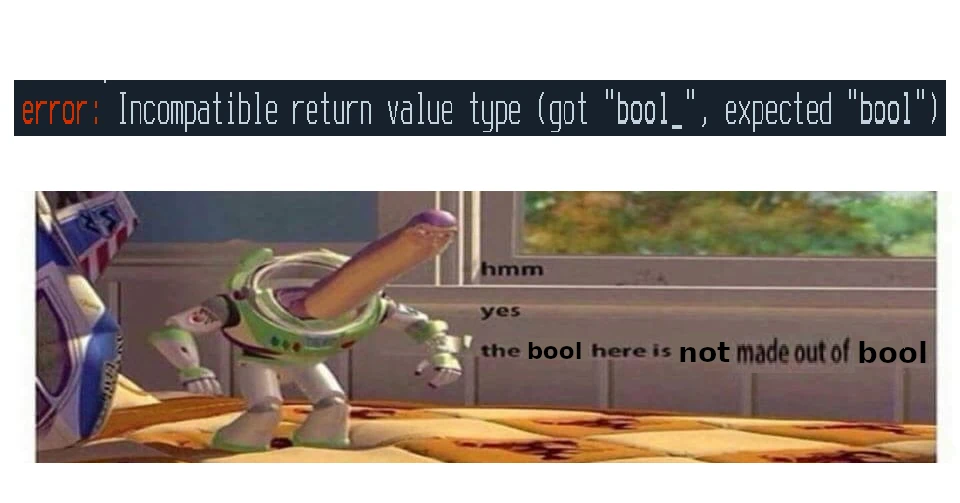

`bool_` exists for whatever reason. It's not a `bool`, but it's functionally equivalent. The static type checker mypy correctly states that `bool_` and `bool` aren't compatible, in the same way other distinct types aren't compatible.

Technically the Python bool is fine, but it's part of what makes numpy special. Under the hood numpy uses C-typed data structures (you can look into Cython if you want to learn more).
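A small sketch of both points, assuming NumPy is installed: `np.bool_` is its own scalar type rather than a subclass of Python's `bool` or `int` (which is why mypy treats the two as incompatible), and inside an array each boolean is a single byte in a C buffer. The `describe` function is a hypothetical helper made up for illustration; the mypy behaviour is what the screenshot above shows, not something this script checks.

```python
import sys

import numpy as np


def describe(flag: bool) -> str:
    """Hypothetical helper annotated to take a plain Python bool."""
    return "yes" if flag else "no"


np_true = np.bool_(True)

# NumPy's boolean scalar is its own type, not a subclass of Python's bool or int.
print(issubclass(np.bool_, bool))   # False
print(issubclass(np.bool_, int))    # False
print(issubclass(bool, int))        # True: Python's bool *is* an int

# At run time the NumPy scalar is still truthy, so this call works, but a
# static checker such as mypy is expected to flag the argument type here,
# since NumPy's type stubs do not declare np.bool_ as a subclass of bool.
print(describe(np_true))            # yes

# Inside an array, each boolean takes one byte of a contiguous C buffer.
flags = np.array([True, False, True, True])
print(flags.dtype, flags.itemsize, flags.nbytes)  # bool 1 4

# A Python bool is a full object; its size is a CPython implementation detail.
print(sys.getsizeof(True))
```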

These more optimized C structures are part of where numpy's speed comes from. This means that if you want to compare things (say, check an array of booleans to find whether any are false), you either need to slow back down and mix Python's objects back in, or, as numpy did, keep everything in C, make your own data type, and keep on trucking knowing everything is compatible.
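The "find if any are false" case might look like this, as a rough sketch assuming NumPy is installed (the variable names are made up for illustration). The first version never leaves NumPy; the second converts every element back into a Python `bool` and loops in the interpreter. On large arrays the first is generally much faster, though the exact gap depends on array size and machine.

```python
import numpy as np

flags = np.array([True, True, False, True])

# Stays inside NumPy: `~` flips every element and `.any()` reduces in
# compiled code, returning a NumPy boolean scalar.
any_false_vectorized = (~flags).any()

# Drops back into Python: each element becomes a Python bool and the
# interpreter checks them one at a time.
any_false_python = any(not x for x in flags.tolist())

print(any_false_vectorized, any_false_python)  # True True
```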

There are probably more reasons, but that's the main one I see. If they depend on any specific logic (say, treating it as an actual boolean and not letting you add two True values together and get an int like you do in base Python), then having their own type also ensures that logic.
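The "adding two True values" difference is easy to see directly. A minimal sketch, assuming NumPy is installed: base Python treats `True` as `1`, while adding two NumPy boolean scalars stays boolean (it behaves like a logical OR). Counting is still possible in NumPy, but only through an explicit reduction such as `.sum()`.

```python
import numpy as np

# Base Python: bool subclasses int, so True acts like 1 in arithmetic.
print(True + True)                             # 2
print(sum([True, True, False]))                # 2

# NumPy: adding two bool_ scalars stays boolean (it behaves like logical OR).
print(np.True_ + np.True_)                     # True
print(type(np.True_ + np.True_) is np.bool_)   # True

# Counting is still available, but only when you ask for it explicitly.
print(np.array([True, True, False]).sum())     # 2
```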

This is the only actual explanation I've found for why numpy leverages its own implementation of what is in most languages a primitive data type, or a derivative of an integer.

You know, at some point in my career I thought it was kind of silly that so many programming languages optimize for speed so much.

But I guess that's what you get for not doing it: people having to leave your ecosystem behind and spread across NumPy/Polars, Cython, plain C/Rust, and probably others. 🫠

Someone else points out that Python's native `bool` is a subtype of `int`, so adding a `bool` to an `int` (or performing other mixed operations) is not an error, which might then go on to cause a hard-to-catch semantic/mathematical error.

I am assuming that trying to add a NumPy `bool_` to an `int` causes a compilation error at best and a run-time warning, or traceable program crash at worst.
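To make the "not an error" point about Python's native `bool` concrete, here is a small stdlib-only sketch; `next_page` and its arguments are hypothetical names invented for illustration. A flag ends up where a step size was intended, and nothing complains: not the interpreter, and not mypy either, since `bool` is a valid `int`.

```python
# Python's bool is a subclass of int, so mixing the two never raises.
print(issubclass(bool, int))  # True


def next_page(current: int, step: int) -> int:
    """Hypothetical helper: advance a page counter by `step` pages."""
    return current + step


has_more = 10 > 3  # a comparison result, i.e. a bool

# Bug: the flag was passed where a step size was intended. There is no
# exception, and a type checker accepts it because bool is a subtype of int.
page = next_page(5, has_more)
print(page)  # 6
```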