this post was submitted on 28 Apr 2024

503 points (96.8% liked)

Science Memes


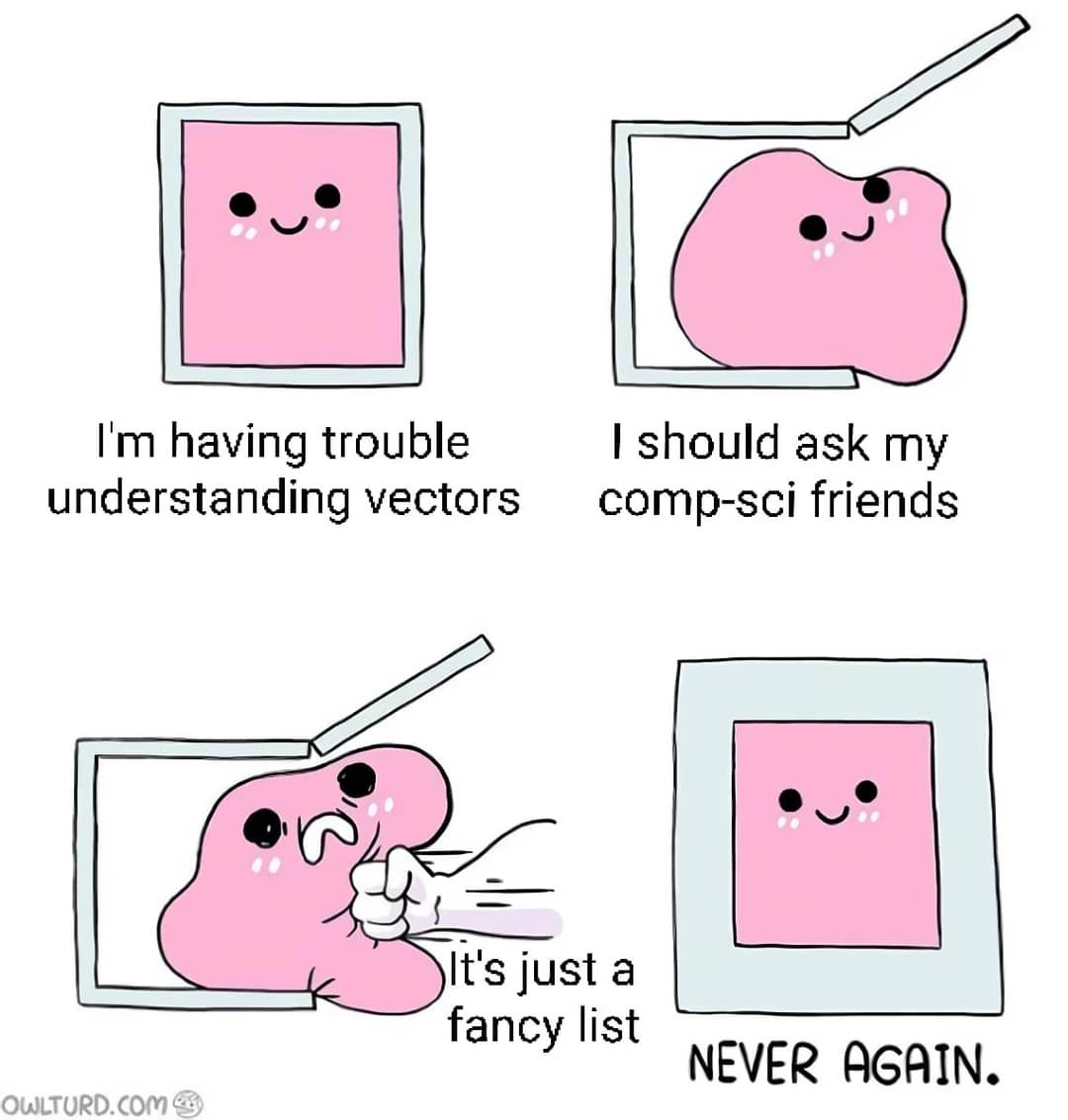

It's like a fancy list.

So is a wedding gift registry.

No, this is Patrick!

dynamically-sized: The size of it can change as needed.

list: It stores multiple things together.

object: A bit of programmer defined data.

of the same type: All the objects in the list are defined the same way.

stored contiguously in memory: If you think of memory as a bookshelf, then all the objects in the list would be stored right next to each other on the bookshelf rather than spread across it.
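To make the bookshelf picture concrete, here is a minimal Rust sketch. The `Point` type and the `is_contiguous` helper are made up for illustration; only `Vec` and its methods come from the standard library. It builds a list of same-typed objects, grows and shrinks it, and checks that each element starts exactly one element-size after the previous one.

```rust
use std::mem::size_of;

/// A bit of programmer-defined data (hypothetical example type).
#[derive(Debug, Clone, Copy, PartialEq)]
struct Point {
    x: i32,
    y: i32,
}

/// Returns true if every element starts exactly `size_of::<T>()` bytes
/// after the one before it, i.e. the items sit side by side in memory.
fn is_contiguous<T>(items: &[T]) -> bool {
    let step = size_of::<T>();
    items.windows(2).all(|pair| {
        let first = &pair[0] as *const T as usize;
        let second = &pair[1] as *const T as usize;
        second - first == step
    })
}

fn main() {
    // dynamically-sized: starts empty, grows as we push, shrinks as we pop.
    let mut points: Vec<Point> = Vec::new();
    points.push(Point { x: 0, y: 0 });
    points.push(Point { x: 1, y: 2 });
    points.push(Point { x: 3, y: 4 });
    points.pop();

    // of the same type: every element is a `Point`.
    println!("first point: ({}, {})", points[0].x, points[0].y);
    println!("len = {}", points.len());
    println!("contiguous = {}", is_contiguous(&points));
}
```

The check passes an ordinary slice, so the same helper would also work on an array.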

Dynamically sized but stored contiguously makes the systems performance engineer in me weep. If the lists get big, the kernel is going to do so much churn.

Contiguous storage is very fast to iterate over, though, which often offsets the cost of allocation.

Modern CPUs are also extremely efficient at dealing with contiguous data structures. Branch prediction and caching get to shine on them.

Avoiding memory access, or helping the CPU access it all up front, switches the physical domain of the computation.

Which is why you should:

`Vec` doubles in size every time it runs out of space). Memory is fairly cheap. Allocation time, not so much.
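A rough way to see the "memory is cheap, allocation time not so much" trade-off in Rust: the sketch below counts how often a `Vec`'s capacity changes while pushing, once starting empty and once starting from `Vec::with_capacity`. The `count_capacity_changes` helper is hypothetical, and a capacity change is only a proxy for a reallocation. The exact growth factor is an implementation detail, so the code prints the count rather than assuming it.

```rust
/// Pushes `n` integers into `v` and counts how many times its capacity
/// changed along the way (a proxy for how often it had to reallocate).
fn count_capacity_changes(mut v: Vec<u64>, n: u64) -> (Vec<u64>, usize) {
    let mut changes = 0;
    let mut last_capacity = v.capacity();
    for i in 0..n {
        v.push(i);
        if v.capacity() != last_capacity {
            changes += 1;
            last_capacity = v.capacity();
        }
    }
    (v, changes)
}

fn main() {
    let n: u64 = 1_000_000;

    // Start empty and let the Vec grow whenever it runs out of space.
    let (_, grown) = count_capacity_changes(Vec::new(), n);

    // Reserve room for everything up front, so pushing never has to grow.
    let (_, preallocated) = count_capacity_changes(Vec::with_capacity(n as usize), n);

    println!("grown on demand: {} capacity changes", grown);
    println!("preallocated:    {} capacity changes", preallocated);
}
```

The preallocated run pays for all its memory once, at the start; the on-demand run pays in several growth steps, each of which may copy everything stored so far.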

MATLAB likes to pick the smallest available spot in memory to store a list, so for loops that increase the size of a matrix, it's recommended to preallocate the space using a matrix full of zeros!

Is that churn or chum? (RN or M)

Churm

Many things like each other lined up in a row, and you can take some away or put more in.

It's how you want an array to work.