I’m very happy to see that the industry has moved away from the blockchain hype. The AI/ML hype is at least useful, even if it is just a buzzword in most places.

Programmer Humor

So true.

With LLMs, I can think of a few realistic and valuable applications, even if they don’t deliver on the hype and don’t actually turn the world upside down. With blockchain, I just could never see anything in it. Anyone trying to sell me on its promises would use the exact words people use to sell a scam.

Blockchain is a great solution to an almost nonexistent problem. If you need a small, public, slow, append-only, hard-to-tamper-with database, then it is perfect. 99.9% of the time you want a database that is read-write, fast and private.
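To make the "append-only, hard to tamper with" part concrete, here is a minimal Python sketch of a hash-chained log, which is roughly the core data structure of a blockchain minus the distributed consensus. Each entry stores the SHA-256 hash of the previous entry, so editing any old record breaks the chain. The class and method names are made up for illustration.

```python
import hashlib
import json


class HashChainLog:
    """Append-only log where each entry commits to the hash of the previous one.

    Hypothetical illustration: no networking, no consensus, no proof-of-work.
    """

    GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

    def __init__(self):
        self.entries = []

    @staticmethod
    def _hash(prev_hash, data):
        # Serialize deterministically so the same entry always hashes the same way.
        payload = json.dumps({"prev": prev_hash, "data": data}, sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    def append(self, data):
        prev_hash = self.entries[-1]["hash"] if self.entries else self.GENESIS
        entry = {"prev": prev_hash, "data": data, "hash": self._hash(prev_hash, data)}
        self.entries.append(entry)
        return entry["hash"]

    def verify(self):
        # Recompute every hash and check that each entry links to its predecessor.
        prev_hash = self.GENESIS
        for entry in self.entries:
            if entry["prev"] != prev_hash:
                return False
            if entry["hash"] != self._hash(prev_hash, entry["data"]):
                return False
            prev_hash = entry["hash"]
        return True


log = HashChainLog()
log.append({"from": "alice", "to": "bob", "amount": 5})
log.append({"from": "bob", "to": "carol", "amount": 2})
print(log.verify())  # True

log.entries[0]["data"]["amount"] = 500  # tamper with an old record
print(log.verify())  # False
```

This only makes tampering detectable. The "public" and "slow" parts come from replicating the chain across parties who don't trust each other and getting them all to agree on one order of entries, which is the expensive bit.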

My thoughts exactly.

I was told that, apart from flying scams under the regulatory radar, the thing it’s great at is low-trust business transactions. But like, there are so many application-level ways to reasonably guarantee that transactions stored in a private database can be trusted, for all kinds of business needs. I guess it would be an amazing solution if those other, simpler ways didn’t exist!
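As one example of those simpler application-level approaches, here is a minimal sketch that assumes a business partner and the database owner share a secret key. Every transaction record is tagged with an HMAC (Python's standard `hmac` module), so a row edited after the fact no longer verifies. The key, record fields, and function names are hypothetical. Because the key is shared, this guards against outside tampering rather than against the key holders themselves; public-key signatures would close that gap.

```python
import hashlib
import hmac
import json

# Hypothetical shared secret; in practice this would come from a key store, not source code.
PARTNER_KEY = b"example-shared-secret"


def sign_record(record, key=PARTNER_KEY):
    """Wrap the record together with an HMAC-SHA256 tag over its canonical JSON form."""
    body = json.dumps(record, sort_keys=True).encode("utf-8")
    tag = hmac.new(key, body, hashlib.sha256).hexdigest()
    return {"record": record, "tag": tag}


def verify_record(signed, key=PARTNER_KEY):
    """Recompute the tag and compare it in constant time."""
    body = json.dumps(signed["record"], sort_keys=True).encode("utf-8")
    expected = hmac.new(key, body, hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, signed["tag"])


row = sign_record({"invoice": 1042, "amount_cents": 129900, "payer": "acme"})
print(verify_record(row))  # True

row["record"]["amount_cents"] = 1  # someone edits the row directly in the database
print(verify_record(row))  # False
```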

We're currently adding AI support to our platform for email marketing and it's crazy what can be done. Whole campaigns (including links to products or articles) made entirely by GPT-4 and Stable Diffusion. You just need to proofread it afterwards and it's done. Takes 15 minutes tops (including the proofreading).

takes 15 minutes tops

Not including the hours dicking around with prompts.

Only charlatans were recommending blockchain for everything. It was painfully obvious how inefficiently it solved a non-existent problem.

You could always tell, because no one could ever really explain in simple terms what it does or why it is useful, other than defending NFTs' existence and enjoying the volatility of the crypto market (not currency).

The biggest problem is that even OP is unaware of what is really being skipped: math, stats, optimization & control. And like at a grad level.

But hey, import AI from HuggingFace, and let's go!

The worst part of ML is Python package management

Yeah, I feel like Python is partly responsible for most of this meme. It's easy for very simple scripts and it has lots of ML libraries. But all the stuff in between is made more difficult by the whole ecosystem being focused on scripting...

Why walk when you can learn to run!?

Where's discrete mathematics?

Math? I write code, that's words bro. Why would I ever need math?

Shhh, they’re trying to be discreet about discrete mathematics

On the first floor

I've been programming for like 5 years now and never even attempted anything AI/ML related

Same. I know my limits lol

Unless you're dead set on doing everything yourself, it's pretty easy to get into.

Get to it then

Going to be starting computer science in a few weeks. I feel like AI/ML is something you want an experienced teacher for instead of botching something together

That username does not check out.

This kind of vibe is becoming genuinely scary from a "no one knows how X actually works, but they are building things that might become problematic later" headspace. I am not saying that everyone needs to know everything. But one really, really bad issue I see while fixing people's PCs is that a shocking number of high-school- and college-aged folks are really into media creation and/or are comp sci majors. Yet they come to me with issues that make me question how they are able to function, knowing so many things that all involve computers but not the computers themselves.

These next paragraphs are mostly a rant about how the OSes are making the issue worse for all users, not just the folks above. There is also more ranting about my frustration and concern that no one cares about the fundamentals of how the machines they make their stuff on actually work. Feel free to skip; I am marking it as a "spoiler" to make things slightly less of a "wall of text".

spoiler

Some of it is the fault of desktop OSes all trying to act like smartphone OSes, which do everything possible to hide where your data actually lives on the device. They just go with a "trust me bro, I know where it is so you don't need to" vibe. I have unironically had someone who really, really needed a couple of specific files. When I asked if he knew where they might be saved, his answer was "on the computer." It was mildly funny to watch him react to my face and add, "which I guess is beyond not helpful." I eventually convinced him to freaking try signing into OneDrive, like I had told him to, while I checked his local drive. It turned out the files were not on the PC at all but in OneDrive. After that, things were much more straightforward.

Microsoft tricking people into creating Microsoft Accounts, and further tricking them during setup into letting OneDrive replace the local "Documents", "Desktop", and "Pictures" folders, is a nightmare when trying to help older folks (and even younger folks often don't notice that they are actually making a Microsoft Account). It means that if I just pull the drive out of a computer that won't boot, those folders don't exist in the user's folder. And if the OneDrive folder is there, the data is mostly just stubs of the actual files. Those stubs are useless on their own, and it gets bad if the person only had a free account, it filled up, and there is now data that may be lost because those folders were never "really" present.

They know how to use these (to me) really complicated programs and media devices. They know how to automate things in cool ways. They create content or apps that I will just never wrap my mind around. So I am not over here calling them stupid and just "dunking" on them. But they don't care about, or just refuse to learn, basic hardware or even basic troubleshooting (a lot of which is just a quick Google search away). They know how to create things, but they never ask how the stuff they use to create things works. So what will happen when the folks who know how things work are gone, and all people know is how to make things that presuppose the other things are functioning? All because only whatever is new gets attention, and the foundations are taught less and less. Pair that with things being so messed up that "fake it till you make it" is a real and honest mantra, and only fools list their actual credentials on their resumes.

It is all about getting a job title without knowing a damn thing about what is needed to do the job. It also means so many problems that were solved before need to be re-solved as if they were brand new. Or things that were already being done get "innovated" by people with good BS-ing skills, in obtuse ways that sound great but just add lots of busy work. Then the next "innovator" just puts things back the way they were and is seen as "so masterful." Knowing history and how things currently work matters for making real advancements. If a coder only ever learns to pull in functions or blobs from other projects without knowing what is in them, they could end up basing basically everything on things that, if abandoned or purged, will make their own work stop functioning.

And look at how quickly "professionals" in so, so many industries are relying on these early AI/ML systems without question. They don't verify whether the information they got is factually true and can be cited from real sources. They don't see that the results came from the AI/ML doing exactly the shit it was trained to do, which is to create things based on the "vibe" of actual data. The image generators are all about taking random prompts, comparing them to actual examples of things, and making something kind of similar. But the text-based ones are treated very differently: taken at face value and trusted to a scary degree. And it is getting worse, with so many "trusted" media outlets beginning to use these systems to write articles.

Object-oriented programming is a meme, if you can't code it in HolyC you don't need it

If only TempleOS supported TCP/IP. Luckily, there is a fork called Shrine that does, so bringing Lemmy there would probably be doable.

Not sure, OOP should come before data structures and algorithms...

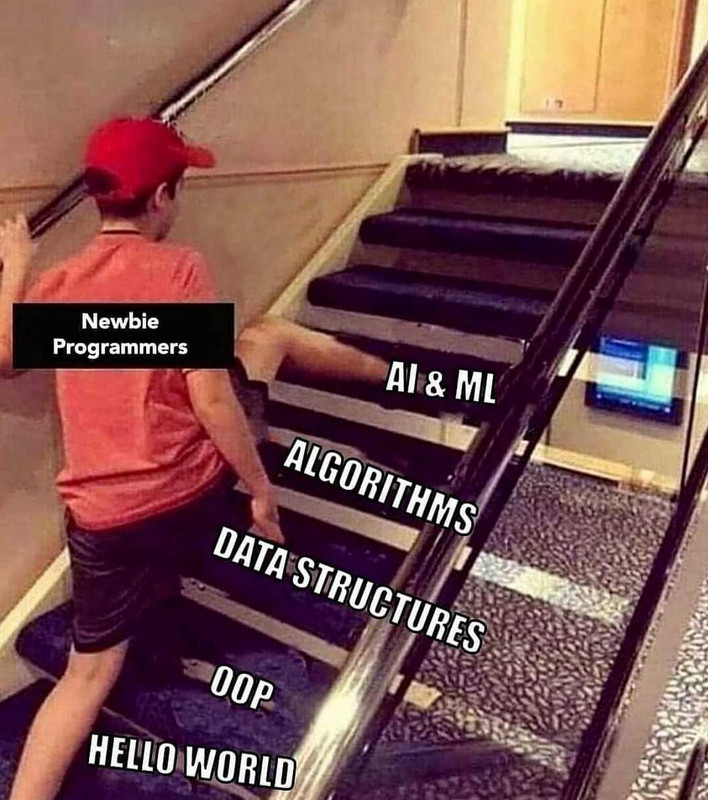

It does. But the meme shows the newbie skipping over everything and going straight to ML.

yeah, it should be the other way around, else OOP becomes too abstract to really understand

And the handrails are YouTube.

Wouldn’t be surprised if now the steps are code and instructions provided by ChatGPT.

I fully understand why people would wanna skip all this stuff, but at that point just learn HTML and CSS instead of programming lol. I'd know, that's what I did...

What is actually supposed to be the ideal way to learn? Say, for someone trying to become a sysadmin?

Here’s a nickel kid. Get yourself a better computer.

If you want to be a sysadmin learn Linux/Unix. Basic bash scripting might be useful down the line to help understand a bit of what’s going on under the hood.

IMHO networking would probably be a better secondary place to focus for a sysadmin track rather than OOP concepts, algorithms etc.

There isn't a singular "right way", but you need to know the basics of computer science like OOP, algorithms, and data structures if you want to be a decent programmer. Everyone has their own advice, but here's mine for whatever it's worth.

If you want to be a sysadmin, you should learn command-line languages and tools like batch, sed, and bash (or a language like Batsh that compiles to both bash and batch). Start simple and don't overwhelm yourself; these languages can behave strangely and directly impact your OS.

When you have a basic grasp of those languages (you don't need to get too complex, just know what you're doing on the CLI), I'd recommend learning Python so you can better learn OOP and study networking while following along with the Flask and socket libraries. The particular language doesn't matter as much as the actual techniques you'll learn, so don't get hung up if you already know, or want to learn, a different language.
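For the "study networking with the socket library" step, here is a minimal sketch of what that can look like in Python: a TCP echo server on localhost and a client talking to it, using only the standard `socket` and `threading` modules. Binding to port 0 asks the OS for any free port; the `echo_once` name is made up for illustration.

```python
import socket
import threading


def echo_once(server_sock):
    # Accept a single connection and send back whatever bytes arrive.
    conn, _addr = server_sock.accept()
    with conn:
        data = conn.recv(1024)
        conn.sendall(data)


# Bind to localhost on an OS-chosen free port (port 0).
server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
server.bind(("127.0.0.1", 0))
server.listen(1)
host, port = server.getsockname()

worker = threading.Thread(target=echo_once, args=(server,))
worker.start()

# Client side: connect, send a message, read the echo.
with socket.create_connection((host, port)) as client:
    client.sendall(b"hello, sysadmin")
    reply = client.recv(1024)

worker.join()
server.close()
print(reply)  # b'hello, sysadmin'
```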

Finally, make sure you understand the hardware, software, and firmware side of things. I'd avoid CompTIA certs out of principle, but they're the most recognizable IT certifications a person can get. You need some understanding of operating systems, and you need to know how to troubleshoot beyond power cycling.

There is a website called roadmap.sh, which has both skill-based and role-based roadmaps for learning to program. There is no actual “SysAdmin” role path, since our job can technically take several routes.

I personally use Debian at my org, and found Python and Bash enough to automate small things that need to be done on a regular basis.

But if, for example, you were a Windows SysAdmin, you’d have to learn to use PowerShell ~~or VBS (idk if those scripts are still a thing)~~.

Windows sys admin here, I haven't seen a vbs script in ages. I'm primarily in PowerShell these days.

PrivateGPT + CS books = ask books questions while self learning?

The issue with that is that LLMs tend to lie when they don't know something. The best tools for that are Stack Overflow, Lemmy, Matrix, etc.

Yeah, and they don't just lie. They lie extremely convincingly. They're very confident. If you ask them to write code, they can make up nonexistent libraries.

In theory, it may even be possible to use this as an attack vector. You could ask an AI repeatedly to generate code, and whenever it hallucinates a package, register that package name yourself and publish something malicious under it. Then you just wait for some future victim to do the same.